DQ-GAT: Towards Safe and Efficient Autonomous Driving with Deep Q-Learning and Graph Attention Networks

Abstract

Autonomous driving in multi-agent dynamic traffic scenarios is challenging: the behaviors of other road users are uncertain and hard to model explicitly, and the ego-vehicle must negotiate with them through maneuvers such as yielding, merging, and taking turns to drive both safely and efficiently in various settings. Traditional planning methods are largely rule-based and scale poorly in these complex dynamic scenarios, often leading to reactive or even overly conservative behaviors, so they require tedious human effort to remain workable. Recently, deep learning-based methods have shown promising results, with better generalization capability and less hand-engineering effort. However, they are either implemented with supervised imitation learning (IL), which suffers from dataset bias and distribution mismatch, or trained with deep reinforcement learning (DRL) but focused on one specific traffic scenario. In this work, we propose DQ-GAT to achieve scalable and proactive autonomous driving, where graph attention-based networks are used to implicitly model interactions, and deep Q-learning is employed to train the network end-to-end in an unsupervised manner. Extensive experiments in a high-fidelity driving simulator show that our method achieves higher success rates than previous learning-based methods and a traditional rule-based method, and achieves a better trade-off between safety and efficiency in both seen and unseen scenarios. Moreover, qualitative results on a trajectory dataset indicate that our learned policy can be transferred to the real world for practical applications at real-time speeds.

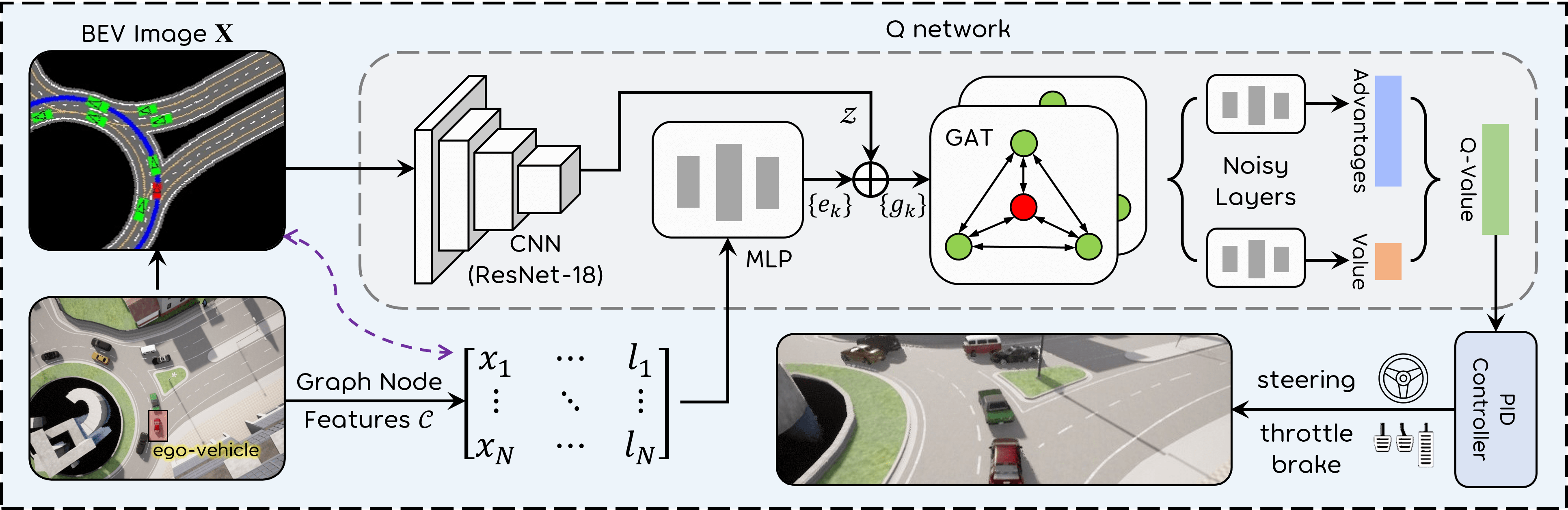

System Architecture

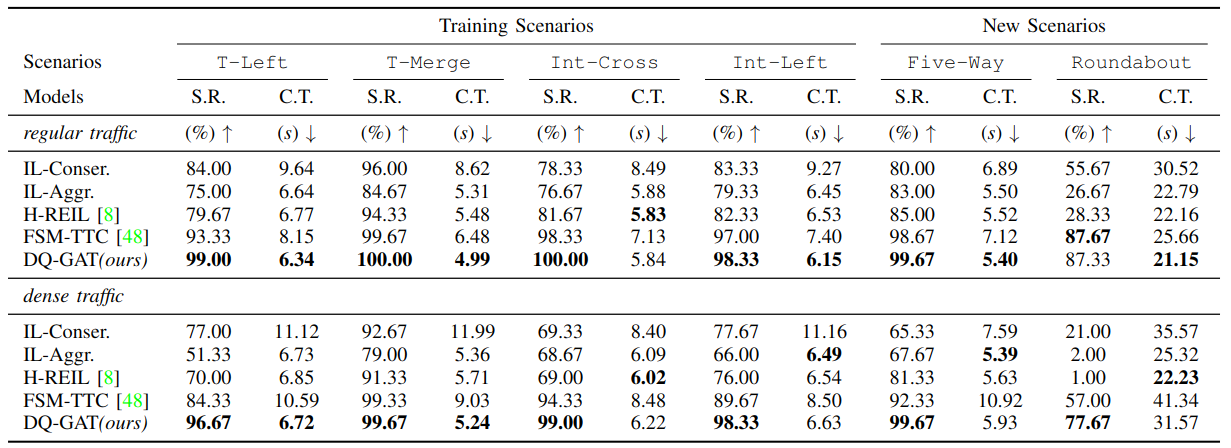
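
To make the two ingredients named in the abstract concrete, the following is a minimal NumPy sketch of how a graph attention layer and a Q-learning target can fit together. It is not the DQ-GAT implementation: the single attention head, the feature sizes, the random weights, and the helper names (`ego_graph_attention`, `q_values`, `td_target`) are all assumptions chosen for illustration. Row 0 of the node matrix is assumed to be the ego-vehicle and the remaining rows are surrounding road users.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def softmax(x):
    z = np.exp(x - np.max(x))  # subtract the max for numerical stability
    return z / z.sum()

def ego_graph_attention(node_feats, W, a):
    """Single-head, GAT-style aggregation centered on the ego node (row 0).

    node_feats: (N, F) per-agent features (hypothetical layout)
    W:          (F, H) shared linear projection
    a:          (2H,)  attention vector
    Returns the attended context (H,) and attention weights (N,).
    """
    h = node_feats @ W                                  # (N, H)
    ego = np.tile(h[0], (h.shape[0], 1))                # ego repeated N times
    pairs = np.concatenate([ego, h], axis=1)            # (N, 2H): [h_ego || h_j]
    alpha = softmax(leaky_relu(pairs @ a))              # weights over agents
    return alpha @ h, alpha

def q_values(context, W_q, b_q):
    """Linear Q head: one value per discrete driving action."""
    return context @ W_q + b_q

def td_target(reward, next_q, done, gamma=0.99):
    """One-step Q-learning target: r + gamma * max_a' Q(s', a')."""
    return reward + (0.0 if done else gamma * float(np.max(next_q)))

# Toy forward pass with random weights (assumed sizes).
rng = np.random.default_rng(0)
N, F, H, A = 4, 5, 8, 3          # agents, input feats, hidden size, actions
node_feats = rng.normal(size=(N, F))
W = rng.normal(size=(F, H))
a = rng.normal(size=(2 * H,))
W_q = rng.normal(size=(H, A))
b_q = np.zeros(A)

context, alpha = ego_graph_attention(node_feats, W, a)
q = q_values(context, W_q, b_q)
action = int(np.argmax(q))       # greedy action under the current Q estimate
```

In this sketch the attention weights `alpha` indicate how strongly each surrounding agent influences the ego-vehicle's decision, which is one way interactions can be modeled implicitly rather than with hand-written rules. In training, the squared difference between `q[action]` and `td_target(...)` would typically serve as the loss.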

Comparative Results

The bold font highlights the best results in each column.